Introducing AgentEval.org: An Open-Source Benchmarking Initiative for AI Agent Evaluation

Feb 25, 2025

As large language models (LLMs) become the backbone of applications across industries, it’s becoming increasingly difficult for companies and users to understand what good AI performance looks like. That’s why we’re excited to announce AgentEval.org, the first open-source repository for AI benchmarks — an open, public resource providing datasets, best practices, and assessment methodologies.

Where We’re Starting: Open Benchmarking for the Legal Industry

Today, Agent Eval is an open, community-driven initiative designed to bring together a consortium of researchers, industry leaders, and other stakeholders across the legal domain. While we’re starting by serving researchers, practitioners, and organizations shaping the future of legal AI, our long-term vision is to become a trusted source for AI benchmarking across multiple industries, including legal, finance, healthcare, and beyond.

Most LLM benchmarks today are outdated and narrowly focused, evaluating general model performance rather than how domain-specific applications built on top of LLMs function in real-world settings. There’s a growing need for benchmarks that assess AI agents in applied contexts, ensuring they meet industry-specific requirements and practical use cases. In the legal industry that means things like case analysis, contract review, and regulatory compliance.

Because some benchmarking efforts rely on proprietary datasets, closed methodologies, and restricted access, it can be difficult for researchers and developers to reproduce results, compare models fairly, and refine systems. At the same time, we’ve seen successful open evaluation frameworks, from NIST and ISO standards to initiatives like MLCommons, LMSYS Chatbot Arena, and LegalBench, showing that collaborative, open-source approaches lead to better benchmarking practices.

Enter AgentEval.org

Agent Eval is an open and trusted source for the latest benchmarking studies and best practices across specialized domains, starting with AI-powered apps used by the legal industry.

Agent Eval brings together the most relevant benchmarking studies from leading institutions like Stanford and OpenCompass, and from legal AI vendors such as Thomson Reuters and Harvey. Among them:

Stanford LegalBench, a comprehensive benchmark assessing LLMs across 162 legal reasoning tasks crafted by legal experts;

Overruling Dataset (Thomson Reuters), a classification benchmark designed to evaluate LLMs’ ability to detect when legal precedent has been overruled;

BigLaw Bench, a benchmark evaluating LLMs on real-world legal tasks that mirror billable work in law firms.

Together, these studies provide a useful foundation for evaluating AI performance in legal settings.

Who This Helps

Agent Eval benefits a broad set of stakeholders across the AI and legal ecosystems:

Law firms — Gain a clear, standardized way to compare legal AI solutions and select the best tools for their needs.

Legal AI vendors — Understand their performance relative to competitors and improve their models based on objective, industry-standard benchmarks.

Academics & Policymakers — Access insights into how AI systems perform in real-world legal applications, ensuring responsible deployment and regulation.

The broader AI industry — By making benchmarks and best practices freely available, Agent Eval gives smaller startups, research institutions, and independent developers access to the same high-quality evaluation resources as well-funded private companies.

Bringing Transparency and Trust to AI Benchmarking

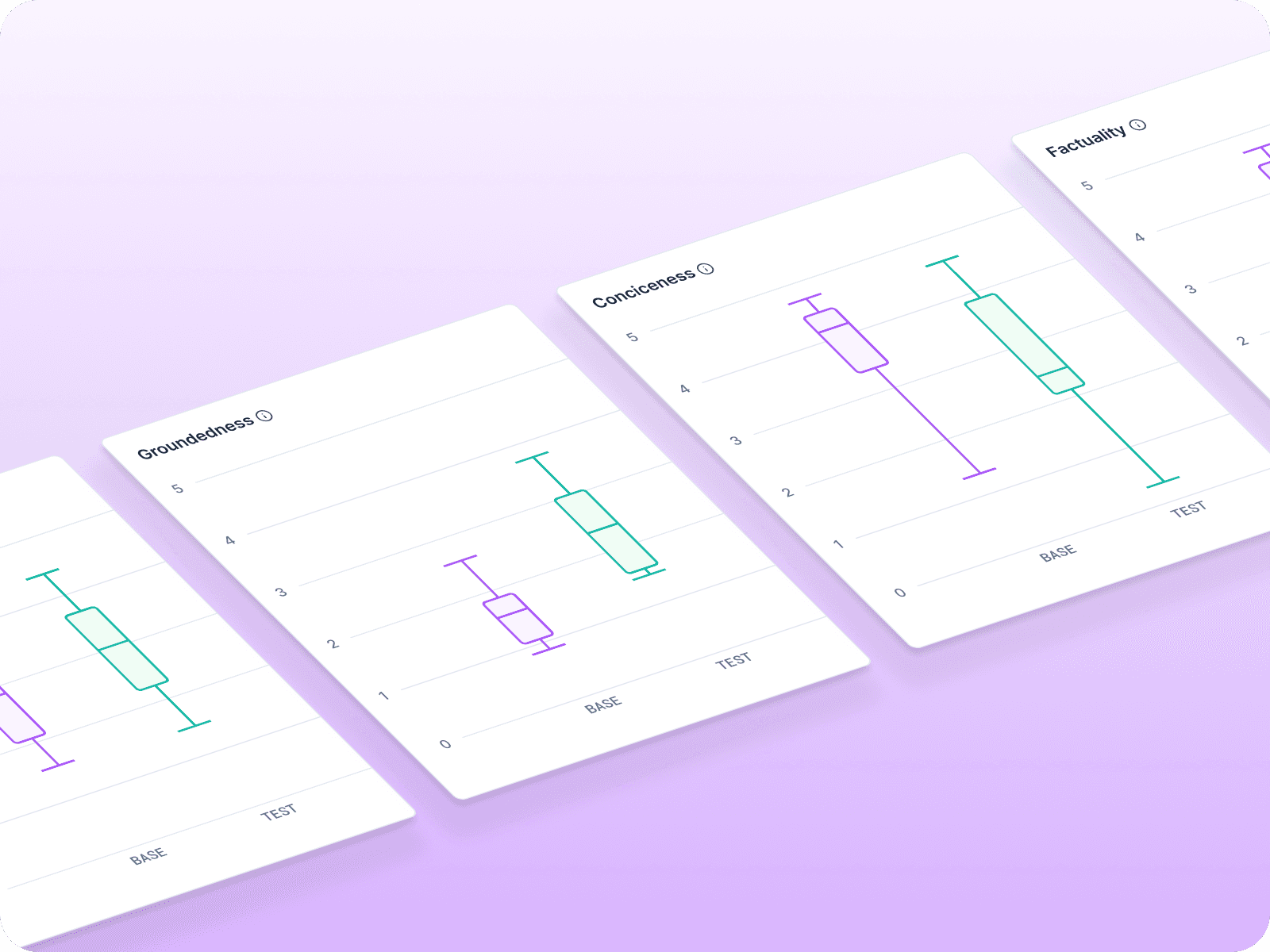

Scorecard is a platform for teams building and evaluating AI products, from initial prototyping and experimentation to preparing AI products and agents for release. We also help with understanding performance in production, identifying issues, and feeding those insights back into experimentation. Clients like Thomson Reuters rely on us to evaluate and refine AI performance across products like Westlaw, CoCounsel Core, and Practical Law. These products remain proprietary and separate from the open-source benchmarking initiative.

We’re huge fans of open-source standards and approaches and believe they’re essential to driving trust in AI. That’s why we were among the first to adopt OpenTelemetry as part of our AI platform, helping establish standardized observability for generative AI. Our SDKs are already open source, and we’re committed to expanding our contributions over time. By bringing these same principles to AI benchmarking, Agent Eval aims to be a trusted, neutral platform where the industry can align on transparent and standardized evaluation practices.

Join the First Open-Source Repository for AI Benchmarks

The standards we set for AI evaluation now will influence its adoption and impact for years to come. AgentEval.org is an open call for collaboration to anyone committed to building better, more transparent AI benchmarks. To get involved:

Join the AgentEval.org working group at [link to join] to receive details about our inaugural meeting on legal agent benchmarks at Stanford this spring

Share this AgentEval resource on LinkedIn with potential contributors [link to share this blog post]