Simulate, Test, Repeat: The Key to Robust AI System Development

Short description goes here

Nov 7, 2024

Before founding Scorecard, CEO Darius Emrani was responsible for evaluation of the self-driving car at Waymo. Waymo — formerly the Google self-driving car project — is an Alphabet subsidiary developing self-driving cars and operating robotaxi services in several U.S. cities. Waymo invested heavily in evaluation systems that use simulation as the primary development tool, enabling rapid iteration and saving billions of dollars by reducing the need for real-world testing.

Simulations are transforming the development and testing of AI systems across industries, far beyond just self-driving cars. In fact, simulated testing is proving to be a game-changer for a wide range of applications. Scorecard is at the forefront of this revolution, empowering its users to harness the power of simulated testing for large language models (LLMs). By providing the tools and expertise needed to evaluate and improve LLMs through simulation, Scorecard is demonstrating that better evaluations can be the key to unlocking significant advancements in AI.

Navigating Complexity: The Shared Challenges of Self-Driving Cars and LLMs

While self-driving cars and LLMs are used in completely different scenarios, both operate in complex, real-world environments with a variety of potential unexpected situations. Creating the training data to accommodate edge cases is a constant challenge in improving either of these systems. Let’s take a look at further similarities between the two:

Both systems (self-driving cars and LLMs) rely on massive datasets to improve performance - either learning from millions of road miles or massive corpora of text. Incomplete or unclear training or testing information sets an AI system up to fail—resulting in fatal accidents, or misinformation, like incorrect diagnoses.

Next, both have a need for contextual decision-making: meaning the number of edge cases and unique perceptions never stop growing. Self-driving cars must consider a range of changing factors, such as road conditions and pedestrian behavior, while LLMs need to understand ever-evolving cultural and linguistic nuances.

Meanwhile, the importance of safety and ethical consideration is also increasing. Lawsuits, new legislation, and media coverage mean that systemic, diligent coverage of all known edge cases is critical before any release.

Simulation Testing: The Secret to Waymo's Success in Self-Driving Car Development

Simulation-based testing put Waymo ahead of its competitors because the virtual world replaced the slow month-long cycle of training on the road and the cost of millions of miles of driving. Eliminating these costly processes, the entire economic equation of the existing development cycle was turned on its head. Waymo cleared the competition and reached the market sooner.

“‘That [training] cycle is tremendously important to us and all the work we’ve done on simulation allows us to shrink it dramatically,’ Dolgov told me. ‘The cycle that would take us weeks in the early days of the program now is on the order of minutes.’”

Dmitri Dolgov, Waymo CEO

Self-driving cars are a prime example of how a model's performance can have far-reaching consequences. When such advanced technology is involved, ensuring its safety and reliability is paramount. Simulation testing plays a crucial role in achieving this goal. By rigorously testing these systems in virtual environments, we can identify and address potential issues before they manifest in the real world. This level of accountability and rigorous development is essential, especially for industries like automotive insurance that are closely tied to self-driving technology.

“Without a robust simulation infrastructure, there is no way you can build [higher levels of autonomy into vehicles]. And I would not engage in conversation with anyone who thinks otherwise.’”

Sunil Chintakindi, Allstate Insurance Head of Innovation

Beyond Accuracy: The Multifaceted Evaluation of LLMs

LLMs must be able to respond effectively to a wide range of linguistic nuances, such as idioms, ambiguous phrasing, or technical jargon. These linguistic edge cases can be particularly challenging for LLMs, as they require a deep understanding of language and context.

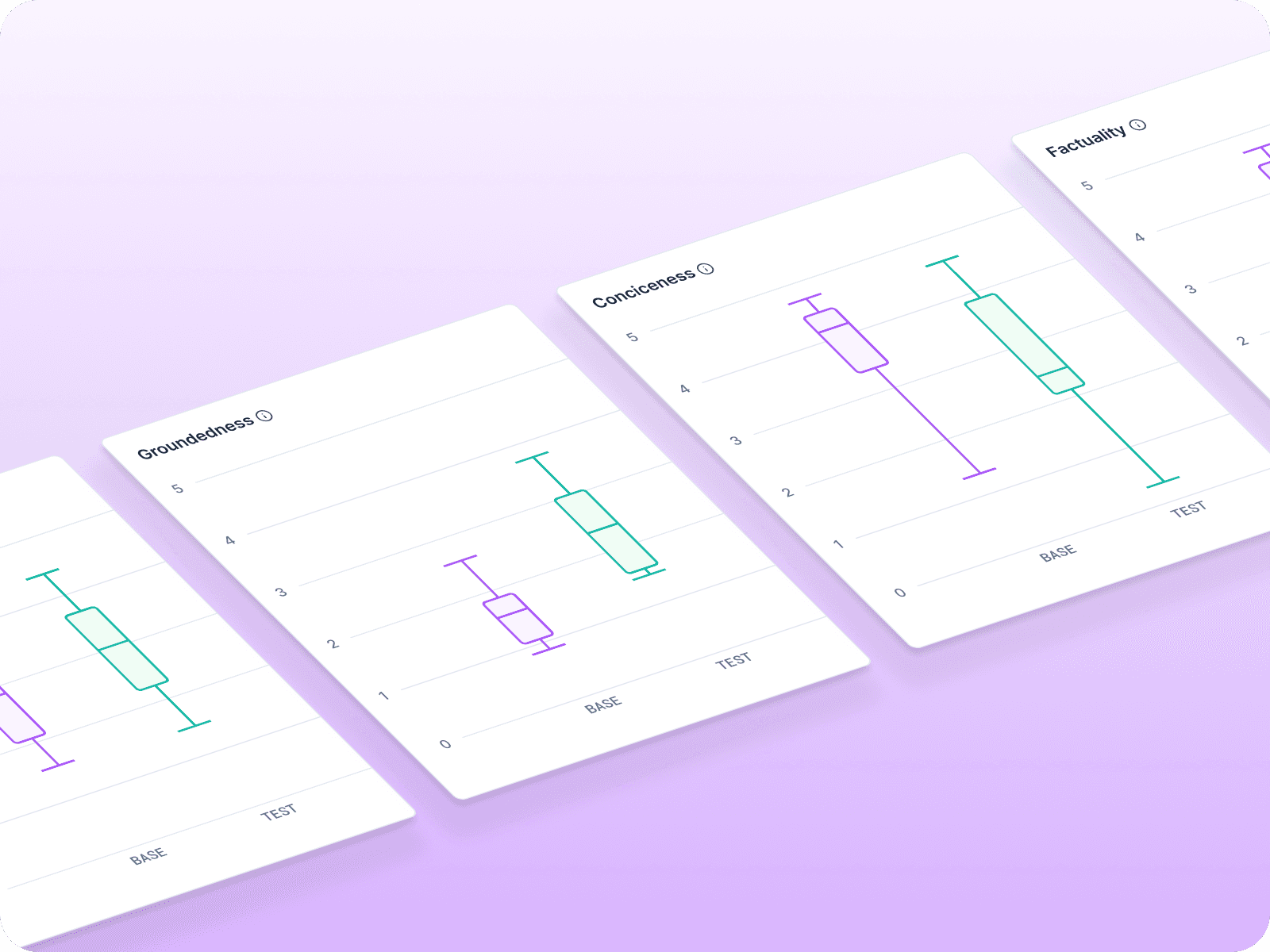

Just like a human language-learner, effective feedback loops are essential here. To evaluate the performance of an LLM system, it's essential to test it thoroughly across multiple dimensions. These dimensions include not only quantitative metrics such as accuracy and efficiency but also qualitative dimensions such as:

Correctness: Does the LLM provide accurate and relevant responses to user queries?

Helpfulness: Does the LLM provide responses that are useful and assistive to the user?

Ethical Compliance: Does the LLM adhere to ethical standards and avoid generating biased or harmful content?

The Role of Scorecard in LLM Evaluation

A comprehensive evaluation framework like Scorecard can help test LLM systems across these dimensions. However, testing alone is not enough. We can draw inspiration from the development of self-driving cars, where simulation-based testing has proven to be a crucial component of ensuring safety and reliability.

Here’s an overview of how parallel challenges can be addressed with simulation-based testing for self-driving cars (left) and evaluations for LLMs with Scorecard (right):

Simulated Testing Equivalencies between Self-Driving Cars & LLMs

Simulation-Based Testing for LLMs

The graph above illustrates a leveled approach to testing LLMs, inspired by the principles of simulation-based testing used in self-driving car development. This approach involves:

Building a twin: Creating a virtual copy of the LLM system under test.

Defining real-world user interactions: Identifying common user interactions with the LLM as test cases.

Introducing fuzzing: Generating variations of inputs based on the previously defined test cases to build a stronger test suite (called a Testset in Scorecard).

By combining these steps, we can create a comprehensive testing framework that evaluates the performance of LLMs in a robust and reliable way, and helps to identify and address potential issues before they arise - with a particular focus on edge cases.

Take your AI on the Road: Integrate Simulated Testing Today

As AI continues to evolve, developers of LLMs and self-driving cars will continue to find more common ground in their processes through simulation-based testing. The fundamentals of each domain are well established, the real opportunity for growth lies in the evaluation phase. Identifying new edge cases and facilitating rapid training and iteration will make all the difference when the rubber hits the road.

Building on the perspective of Greg Brockman, President & Co-Founder at OpenAI, we can go a step further: it’s not just evaluations you need, but simulated evaluations. Simulated evals are often all you need.

Level Up Your LLM Development with Scorecard: Beyond Evaluations to Simulations

Scorecard offers an effective way to measure performance and track model capabilities over time. By using an LLM evaluation tool like Scorecard, development teams can ensure that LLM models are not only performing well in standard evaluations but are also stress-tested through simulated scenarios, preparing them for diverse, real-world challenges.

Ready to take your LLM development to the next level? Sign up for Scorecard today at https://www.scorecard.io/.

References

Madrigal, Alexis C. 2017. “Inside Waymo's Secret World for Training Self-Driving Cars.” The Atlantic.https://www.theatlantic.com/technology/archive/2017/08/inside-waymos-secret-testing-and-simulation-facilities/537648

Seff, et.al. 2023. “MotionLM: Multi-Agent Motion Forecasting as Language Modeling” International Conference on Computer Vision (ICCV) 2023.